Ask Jamie chatbot taken down from MOH site after gaffes

Users bemused after it dished out family planning advice when asked about Covid-19 matters

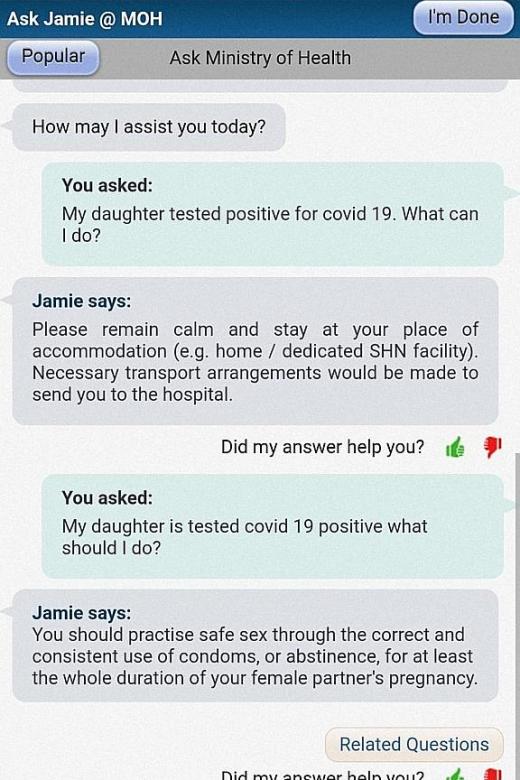

Gaffes by a chatbot on the Ministry of Health's (MOH) website left some Internet users bemused - and many tickled - at the weekend after it dished out family planning advice when asked about Covid-19 matters.

Screenshots of the Ask Jamie chatbot's mistake have been shared online, including on the discussion board Reddit, and have drawn mostly amused responses from netizens.

When the chatbot was asked "My daughter is tested (sic) Covid-19 positive what should I do?", it replied: "You should practise safe sex through the correct and consistent use of condoms, or abstinence, for at least the whole duration of your female partner's pregnancy." Variations of the question, such as ones using "son" instead of "daughter", drew the same kind of reply.

But the chatbot would provide the appropriate advice - to remain calm and stay at the person's place of accommodation - if the question was phrased differently.

As at yesterday, Ask Jamie had been taken down from MOH's website.

Developed by the Government Technology Agency, the Ask Jamie virtual assistant uses natural language processing technology to "understand" a question and pull up the most relevant answer from a government agency's website or database.

A chatbot must be "trained" to learn human speech and understand different ways a question may be posed.

This can be tedious, particularly if done manually by human staff reviewing thousands of questions and responses, said Mr Jude Tan, chief commercial officer of artificial intelligence firm INTNT.ai.

"You have questions which are semantically alike, but pointing to two different answers," he added, referring to Ask Jamie's mistake.

MISINTERPRETED

Some netizens have tried to explain the gaffe, suggesting that the chatbot had picked up on certain words in the question and misinterpreted it as one about sexually transmitted diseases.
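The kind of slip netizens described can be illustrated with a deliberately simplified sketch. It is not Ask Jamie's actual method, which the agency describes only as natural language processing. Instead, it assumes a naive matcher that returns the stored answer whose sample question shares the most words with the user's question. The two FAQ entries, their sample questions and the `best_answer` function are hypothetical and were written only for this illustration.

```python
import re

# Hypothetical FAQ: (sample question, stored answer).
# Invented for illustration; this is not Ask Jamie's real data.
FAQ = [
    ("My partner tested positive on a pregnancy test, what should I do?",
     "You should practise safe sex through the correct and consistent use of "
     "condoms, or abstinence, for at least the whole duration of your female "
     "partner's pregnancy."),
    ("What should I do if I have Covid-19?",
     "Remain calm and stay at your place of accommodation."),
]


def tokens(text: str) -> set[str]:
    """Lower-case the text and return its set of words (letters, digits, hyphens)."""
    return set(re.findall(r"[a-z0-9-]+", text.lower()))


def best_answer(question: str) -> str:
    """Naive matcher: return the answer whose sample question shares the most words."""
    words = tokens(question)
    _, answer = max(FAQ, key=lambda entry: len(words & tokens(entry[0])))
    return answer


print(best_answer("My daughter is tested Covid-19 positive what should I do?"))
print(best_answer("I have Covid-19, what should I do?"))
```

With these made-up entries, the awkwardly phrased question shares seven words ("my", "tested", "positive", "what", "should", "I", "do") with the pregnancy entry and only five with the Covid-19 one, so the wrong answer wins. Rephrasing it as "I have Covid-19, what should I do?" tips the count back to the Covid-19 entry. Real systems weigh meaning rather than raw word counts, but questions that look alike can still point to different answers.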

Wrong answers - termed "false positives" - can happen in about a third of an untrained chatbot's responses, Mr Tan said.

As a chatbot becomes more complex and its database of responses grows, the likelihood of it giving a wrong response also increases.

A chatbot that cannot provide the correct answers within two or three questions from users reflects badly on a company, Mr Tan said.

"I know a couple of companies who had launched their bots and... shut them down two or three years later because of the negativity surrounding the bots, (which) affected their branding."

The Ask Jamie chatbot has been implemented on more than 70 government agency websites.

It is customised to answer questions about its host agencies' services and other relevant topics, but has also been upgraded to display answers from the most relevant agency.

While it has been taken down on the MOH website, a check by The Straits Times showed that the chatbot is still present on others.

ST has contacted MOH for comment.

Get The New Paper on your phone with the free TNP app. Download from the Apple App Store or Google Play Store now